What effect, if any, does a system’s CPU speed have on GPU inference with CUDA in llama.cpp?

How does the new Puget Mobile 17″ compare to the MacBook Pro M3 Max 16″ in performance across a variety of AI-powered workloads?

How does the choice of Operating System affect image generation performance in Stable Diffusion?

How does performance compare across a variety of consumer-grade GPUs in regard to SDXL LoRA training?

How does performance compare across a variety of professional-grade GPUs in regard to SDXL LoRA training?

AMD has published a guide outlining how to use Microsoft Olive for Stable Diffusion to get up to a 9.9x improvement in performance. But is that enough to catch up to NVIDIA?

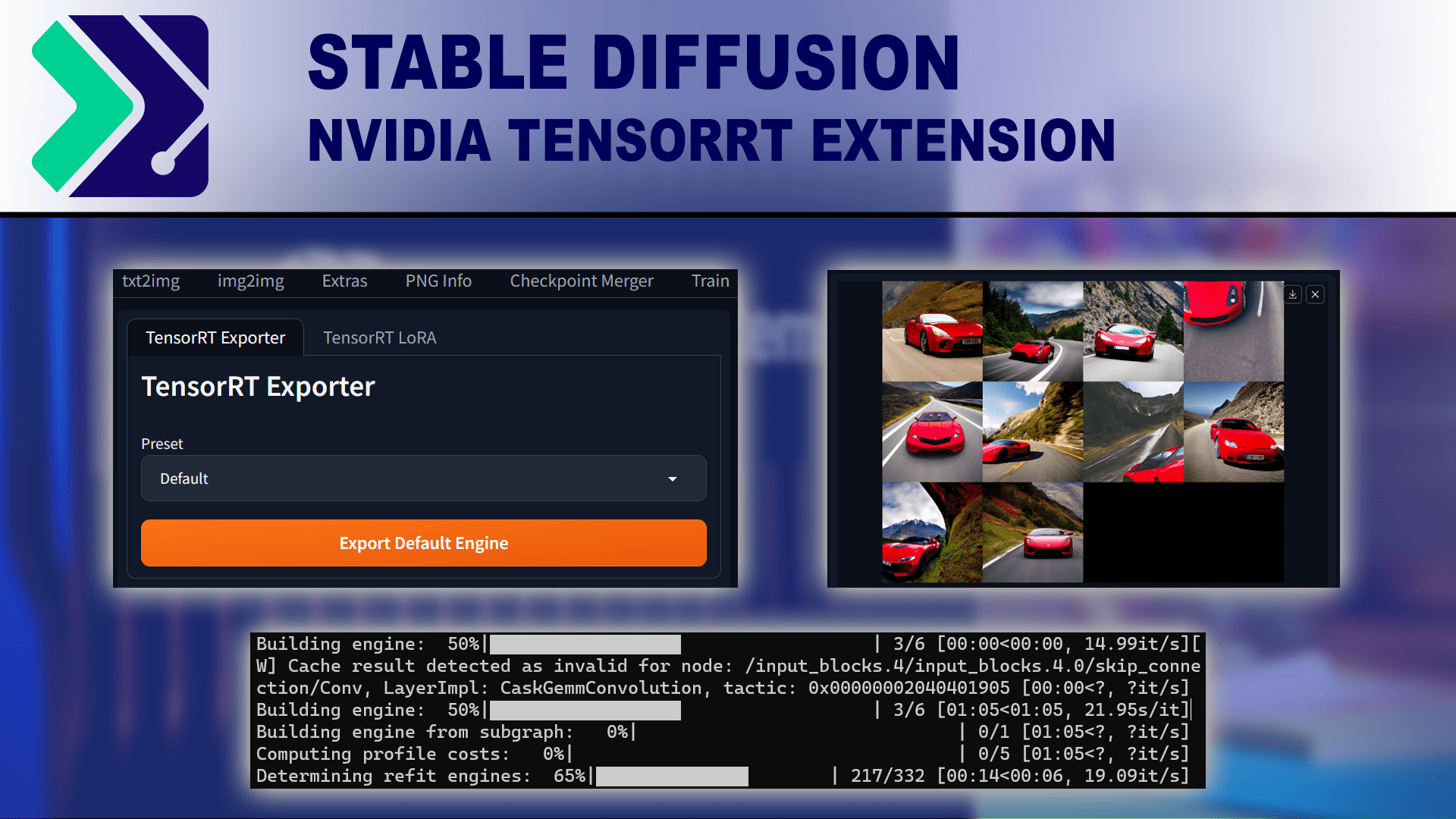

NVIDIA has released a TensorRT extension for Stable Diffusion using Automatic 1111, promising significant performance gains. But does it work as advertised?

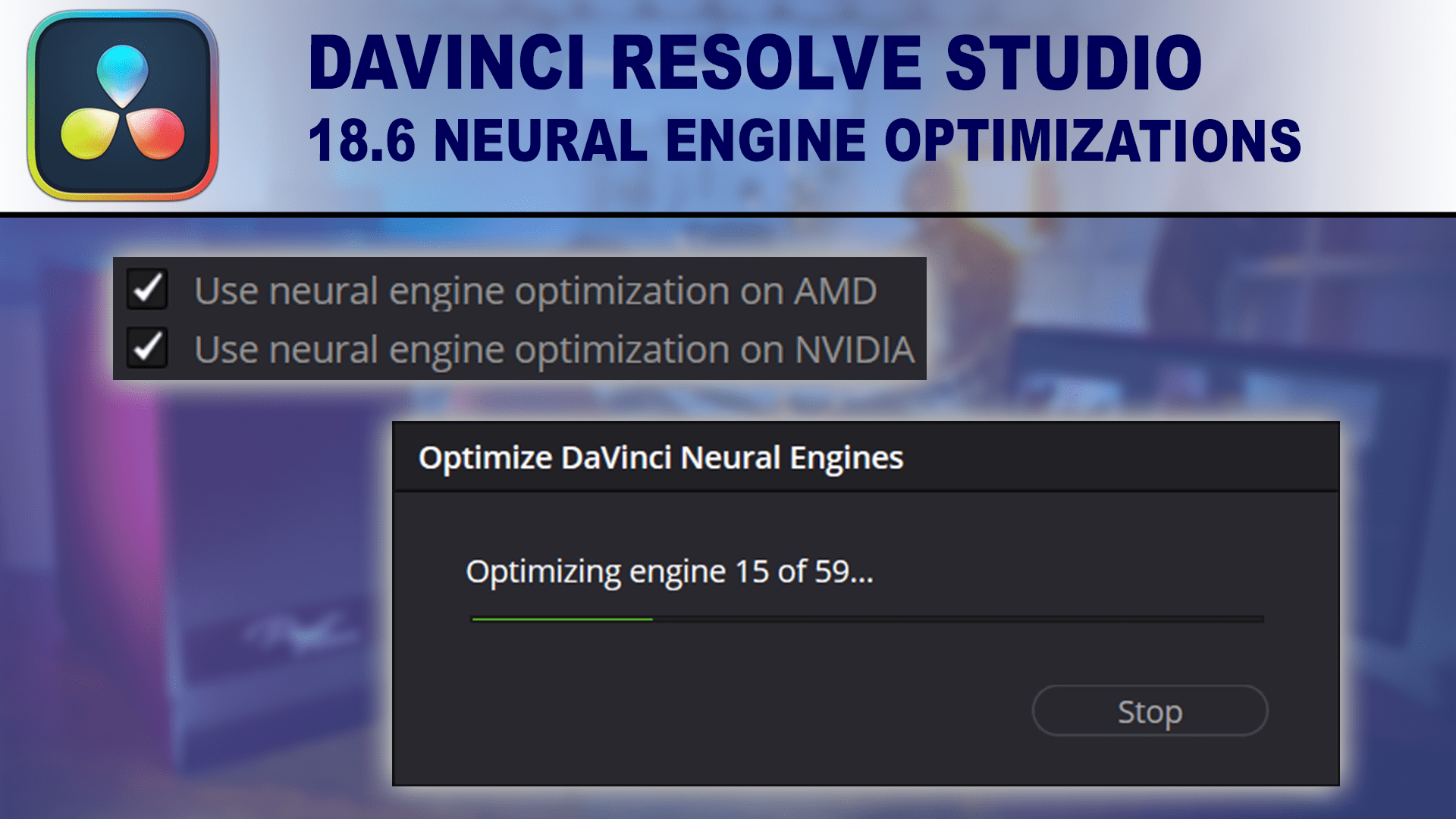

In DaVinci Resolve 18.6, Blackmagic claims up to a 4x improvement in Neural Engine performance for AMD GPUs and a 2x improvement for NVIDIA. Is this claim accurate, or is it based on cherry-picked results that won’t affect most users?

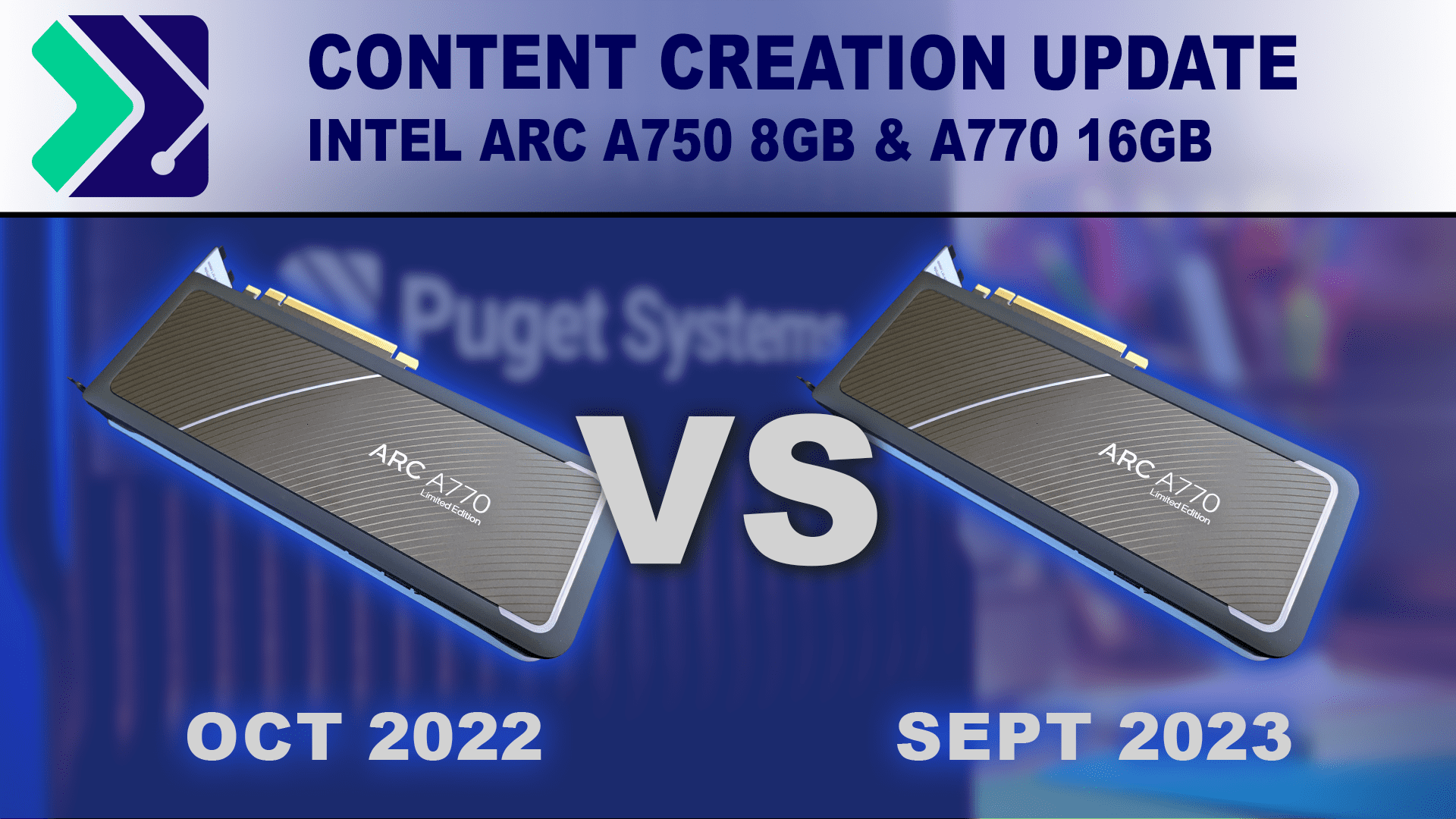

The Intel Arc Alchemist A770 and A750 launched 11 months ago. How have they improved for content creation applications since then?

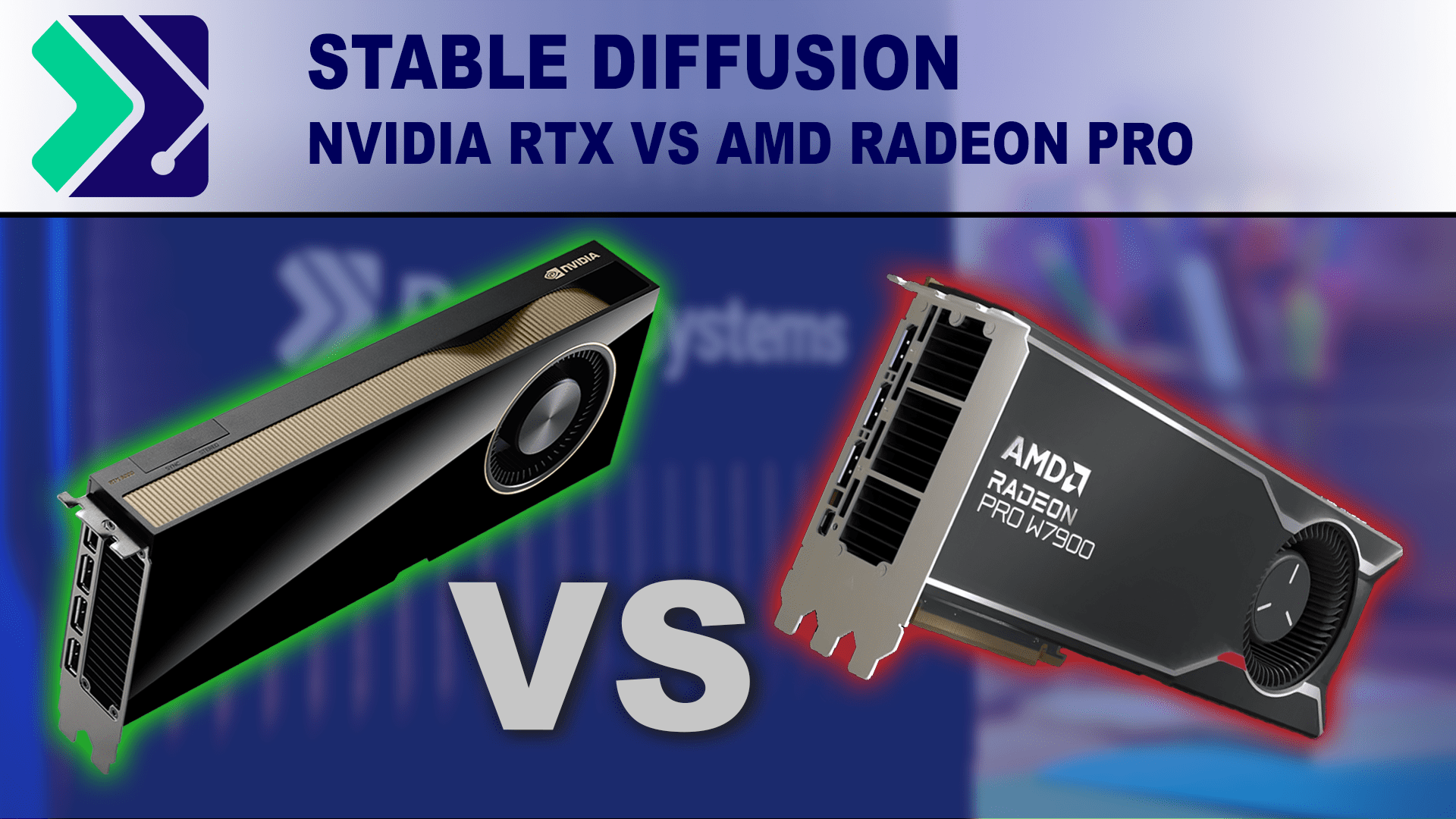

Stable Diffusion is seeing more use for professional content creation work. How do NVIDIA RTX and Radeon PRO cards compare in this workflow?